Why Using QEMU Directly on an Orange Pi 5+ or Raspberry Pi 5 with Armbian Is More Efficient than Installing a Hypervisor

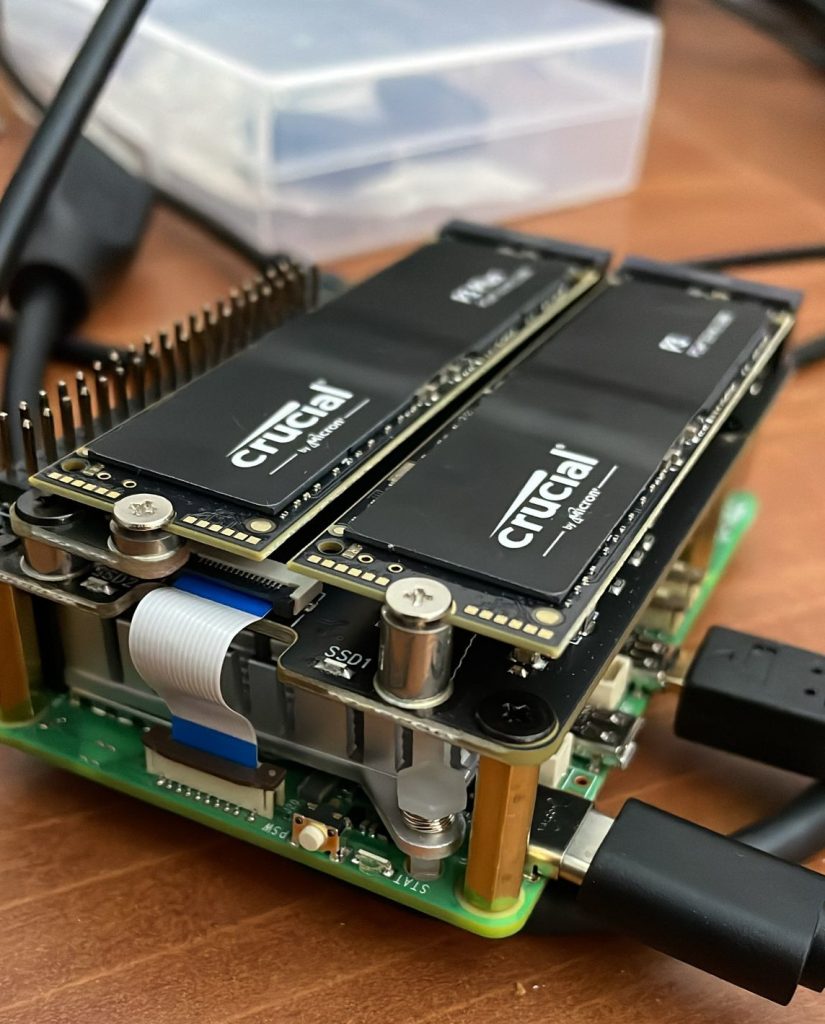

(photo: Raspberry Pi5b + Geekworm X1004 Dual M.2 NVMe SSD Shield PCIe)

When it comes to virtualizing a system like FreeBSD on an Orange Pi 5+ running Armbian, efficiency and resource optimization are critical, especially with limited hardware such as 16 GB of RAM and 8 CPU cores (or 8 GB of RAM and 4 CPU cores on a Raspberry Pi 5). Instead of installing a full hypervisor platform like Proxmox, running QEMU directly, with the KVM acceleration built into the Linux kernel, is a much better fit for making full use of the available hardware. Here’s why.

1. Maximizing Limited Resources

The Orange Pi 5+ has 16 GB of RAM and 8 CPU cores, which is enough to run a single VM like FreeBSD but not necessarily enough to also carry the overhead of a full hypervisor platform like Proxmox. Such platforms add management layers whose web interface, virtualization services, and other features consume memory and CPU time that this specific use case does not need.

QEMU lets you manage the virtual machine directly, without unnecessary intermediaries, so you can give the VM all CPU cores and nearly all of the RAM (the script at the end of this article assigns 10 GB of the 16 GB) instead of sacrificing resources to extra services.

2. Simplicity and Lightness

Hypervisors like Proxmox are designed for complex infrastructures, offering features like clustering, high availability, and multiple VM management. These features are overkill on an Orange Pi 5+ that doesn’t need such advanced capabilities. QEMU, on the other hand, can be launched with a simple command, offering a lightweight and minimal solution that does exactly what’s needed—run a VM.

For a single FreeBSD virtual machine, all you need is QEMU itself, which keeps both memory and CPU usage to a minimum.
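To show how little is actually needed, here is a minimal launch sketch. It reuses the firmware and disk paths from the script at the end of this article and assumes the FreeBSD disk image already exists; adjust the paths to your own layout. By default it only prints the command line; setting RUN=1 (a convention of this sketch, not a QEMU feature) starts QEMU with the serial console on the terminal.

```shell
#!/bin/sh
# Minimal single-VM launch: FreeBSD/aarch64 on the "virt" machine with KVM,
# UEFI firmware in two pflash units and one virtio disk.
# Paths are copied from the full script; they are assumptions for your setup.

set -- qemu-system-aarch64 \
    -machine virt,accel=kvm -cpu host -smp 8 -m 10240 \
    -drive file=/qemu/AAVMF_CODE.fd,format=raw,if=pflash,unit=0,readonly=on \
    -drive file=/qemu/AAVMF_VARS-jcm.fd,format=raw,if=pflash,unit=1 \
    -drive if=virtio,file=/qemu/vm-100-disk-1-jcm.raw,format=raw \
    -nographic

if [ "${RUN:-0}" = 1 ]; then
    # Replace this shell with QEMU; the guest console appears on stdio.
    exec "$@"
fi

# Dry run: show the exact command that would be executed.
printf '%s\n' "$*"
```

Using set -- keeps every option as a separate argument, so no quoting tricks are needed when the command is finally executed.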

3. Better Utilization of 8 CPU Cores

The version of QEMU provided in the Armbian distribution allows full use of the 8 CPU cores on the Orange Pi 5+. Unlike a hypervisor, which may limit or reserve CPU cores for its own usage, QEMU lets you allocate all CPU resources directly to the VM, maximizing virtual machine performance.
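Before handing every core to the guest, it is worth checking that KVM is actually usable, since accel=kvm and -cpu host fail without it. This sketch assumes a Linux host exposing KVM as /dev/kvm (typically owned by the kvm group on Debian-based systems); smp_arg is a hypothetical helper name.

```shell
#!/bin/sh
# Pre-flight check before giving all host CPUs to the guest.

smp_arg() {
    # One vCPU per CPU visible to this process (nproc honours CPU affinity):
    # 8 on an Orange Pi 5+, 4 on a Raspberry Pi 5.
    echo "-smp $(nproc)"
}

if [ -c /dev/kvm ] && [ -r /dev/kvm ] && [ -w /dev/kvm ]; then
    echo "KVM available, use: -machine virt,accel=kvm -cpu host $(smp_arg)"
else
    echo "KVM not usable by $(id -un): check /dev/kvm and kvm group membership" >&2
fi
```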

4. RAM Optimization

With only 16 GB of RAM, every megabyte matters. A hypervisor like Proxmox uses a significant portion of memory for its own processes—network management, user interfaces, and other services. This leaves less memory available for the virtual machine itself.

With QEMU, memory consumption outside the virtual machine is minimal, allowing almost all RAM to be allocated to the VM, thereby maximizing system performance.
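The "almost all RAM" rule can be turned into a number. The sketch below gives the guest everything except a fixed host reserve; the 2048 MiB default is an assumption, not a measured figure, and it must cover Armbian plus QEMU’s own overhead. The author’s script is more conservative, leaving roughly 6 GB to the host (10240 MiB for the VM on a 16 GB board).

```shell
#!/bin/sh
# Size the guest's -m value from the host's memory, minus a host reserve.

# Assumed reserve for the host OS and QEMU itself; override via environment.
HOST_RESERVE_MB=${HOST_RESERVE_MB:-2048}

# vm_mem_mb TOTAL_KB RESERVE_MB: print the MiB left for the guest.
vm_mem_mb() {
    echo $(( $1 / 1024 - $2 ))
}

if [ -r /proc/meminfo ]; then
    # MemTotal is reported in kB by the Linux kernel.
    total_kb=$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)
    echo "-m $(vm_mem_mb "$total_kb" "$HOST_RESERVE_MB")"
fi
```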

5. Hardware Compatibility Workaround

FreeBSD does not support the Orange Pi 5+ hardware well when installed directly on the board. Using a hypervisor platform to work around this might seem like a good idea, but it adds an unnecessary layer of complexity. QEMU alone solves the problem: its virt machine type presents UEFI firmware (AAVMF in the script below) and standard virtio disk and network devices, all of which FreeBSD supports, so the guest never has to deal with the board’s own hardware.
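Booting through UEFI means each VM needs its own writable copy of the UEFI variable store (AAVMF_VARS-jcm.fd in the script), while the firmware code image is shared read-only. A minimal sketch of creating that copy once is below; prepare_vars is a hypothetical helper, and the template location is an assumption (on Debian-based systems it is typically /usr/share/AAVMF/AAVMF_VARS.fd from the qemu-efi-aarch64 package, but check your installation).

```shell
#!/bin/sh
# prepare_vars TEMPLATE DEST
# Create a private, writable UEFI variable store for one VM from the empty
# template. An existing store is never overwritten, so boot entries saved
# by the FreeBSD guest survive.
prepare_vars() {
    template=$1
    dest=$2
    if [ -e "$dest" ]; then
        echo "keeping existing $dest"
        return 0
    fi
    if [ ! -r "$template" ]; then
        echo "template $template not found" >&2
        return 1
    fi
    cp "$template" "$dest" && chmod u+w "$dest"
}
```

Usage, with the assumed template path: prepare_vars /usr/share/AAVMF/AAVMF_VARS.fd /qemu/AAVMF_VARS-jcm.fd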

6. No Superfluous Features

Hypervisor platforms like Proxmox come with features such as snapshot management, live VM migration, and web-based management interfaces. When you run a single VM on an Orange Pi 5+, these features are usually unnecessary.

QEMU does not impose them. Snapshots (with qcow2 images) and migration remain available from the command line or the QEMU monitor if you ever need them, but nothing runs in the background unless you ask for it, which keeps both complexity and resource consumption low.

7. Easy Adaptability and Automation

Launching a VM with QEMU can be easily integrated into shell scripts for automating the start, stop, and management of the FreeBSD VM without the need to set up a web interface or complex APIs like those required by hypervisors. This makes managing your VM more flexible and faster.
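One typical automation need: the guest-agent shutdown used by the script returns immediately, while a backup or host reboot should wait until QEMU has really exited. A minimal polling sketch is below; wait_for_exit is a hypothetical helper, and obtaining the PID assumes you add QEMU’s -pidfile option to the launch options, which the original script does not use.

```shell
#!/bin/sh
# wait_for_exit PID TIMEOUT_SECONDS
# Poll once per second until PID is gone. Returns 0 when the process has
# exited, 1 if it is still alive when the timeout expires.
# Run as root or as QEMU's user: otherwise kill -0 fails with EPERM and the
# process would wrongly look gone.
wait_for_exit() {
    pid=$1
    remaining=$2
    while kill -0 "$pid" 2>/dev/null; do
        if [ "$remaining" -le 0 ]; then
            return 1
        fi
        sleep 1
        remaining=$((remaining - 1))
    done
    return 0
}
```

For example, with -pidfile /run/qemu-freebsd-jcm.pid (a hypothetical path) in the launch options, the stop branch could send guest-shutdown and then run wait_for_exit "$(cat /run/qemu-freebsd-jcm.pid)" 120 || echo "FreeBSD did not stop in time".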

Conclusion

On an Orange Pi 5+ running Armbian, with limited resources such as 16 GB of RAM and 8 CPU cores (or, tighter still, a Raspberry Pi 5 with 8 GB of RAM and 4 CPU cores), running a FreeBSD virtual machine directly with QEMU is much more efficient than installing a full hypervisor platform like Proxmox. With QEMU, you avoid unnecessary overhead, make better use of the available resources, and keep direct control over your VM, while also working around FreeBSD’s hardware limitations on this platform.

My script to manage a single FreeBSD VM on an Orange Pi 5+ with a 1 TB NVMe drive and two USB 3 SSDs (the stop, console and debug commands need socat):

```sh
#!/bin/sh
# Manage a single FreeBSD VM with QEMU/KVM.
# Usage: $0 {start|stop|install|console|debug}
# -netdev bridge relies on qemu-bridge-helper: br0 and br1 must be allowed
# in /etc/qemu/bridge.conf ("allow br0", "allow br1").

QEMUBIN=/usr/bin/qemu-system-aarch64

# Normal operation: 8 vCPUs, 10 GB RAM, UEFI firmware, two disk images,
# two bridged NICs, guest agent channel, monitor and serial console on
# UNIX sockets, two USB 3 SSDs passed through as virtio disks, detached.
BSDPROD="-name FreeBSD14-jcm -machine virt,accel=kvm -smp 8 -cpu host -m 10240 -enable-kvm \
-drive file=/qemu/AAVMF_CODE.fd,format=raw,if=pflash,unit=0,readonly=on \
-drive file=/qemu/AAVMF_VARS-jcm.fd,format=raw,if=pflash,unit=1 \
-drive if=virtio,file=/qemu/vm-100-disk-1-jcm.raw,format=raw \
-drive if=virtio,file=/qemu/vm-100-disk-2-jcm.raw,format=raw \
-rtc base=localtime \
-device virtio-net-pci,netdev=net0,mac=de:ad:be:ef:00:01 \
-netdev bridge,id=net0,br=br0 \
-device virtio-net-pci,netdev=net1,mac=de:ad:be:ef:fa:ce \
-netdev bridge,id=net1,br=br1 \
-uuid 2e0674f0-7ffe-4314-b9bf-deadbeef0001 \
-chardev socket,id=charchannel0,path=/var/run/qga-freebsd-jcm.sock,server=on,wait=off \
-device virtio-serial \
-device virtserialport,chardev=charchannel0,name=org.qemu.guest_agent.0 \
-monitor unix:/tmp/qemu-monitor-freebsd-jcm,server=on,wait=off \
-chardev socket,id=charconsole,path=/tmp/qemu-jcm-tty,server=on,wait=off \
-serial chardev:charconsole \
-drive file=/dev/disk/by-id/usb-USB_3.1_0_Device_00000000545E-0:0,if=virtio,id=drive-virtio0,cache=directsync,format=raw,aio=io_uring,detect-zeroes=on \
-drive file=/dev/disk/by-id/usb-USB_3.1_1_Device_00000000545E-0:1,if=virtio,id=drive-virtio1,cache=directsync,format=raw,aio=io_uring,detect-zeroes=on \
-display none \
-daemonize"

# Installation: boots the FreeBSD 14.1 DVD image with 8 GB RAM and one NIC,
# console on the terminal (-nographic). -no-reboot makes QEMU exit instead
# of rebooting when the installer finishes.
BSDINST="-name FreeBSD14-jcm -machine virt,accel=kvm -smp 8 -cpu host -m 8192 -enable-kvm \
-drive file=/qemu/AAVMF_CODE.fd,format=raw,if=pflash,unit=0,readonly=on \
-drive file=/qemu/AAVMF_VARS-jcm.fd,format=raw,if=pflash,unit=1 \
-cdrom /qemu/FreeBSD-14.1-RELEASE-arm64-aarch64-dvd1.iso \
-drive if=virtio,file=/qemu/vm-100-disk-1-jcm.raw,format=raw,cache=none,aio=native \
-drive if=virtio,file=/qemu/vm-100-disk-2-jcm.raw,format=raw,cache=none,aio=native \
-rtc base=localtime \
-netdev bridge,id=net0,br=br0 \
-device virtio-net-pci,netdev=net0,mac=de:ad:be:ef:00:01 \
-uuid 2e0674f0-7ffe-4314-b9bf-deadbeef0001 \
-chardev socket,id=charchannel0,path=/var/run/qga-freebsd-jcm.sock,server=on,wait=off \
-device virtio-serial \
-device virtserialport,chardev=charchannel0,name=org.qemu.guest_agent.0 \
-monitor unix:/tmp/qemu-monitor-freebsd-jcm,server=on,wait=off \
-nographic \
-no-reboot"

case "$1" in
start|faststart|quietstart)
    # $BSDPROD is left unquoted on purpose so the shell splits it into arguments
    $QEMUBIN $BSDPROD
    ;;
stop|faststop|quietstop)
    # Shut down the FreeBSD VM cleanly through the guest agent
    # (qemu-guest-agent must be running inside FreeBSD)
    echo '{"execute":"guest-shutdown"}' | socat - UNIX-CONNECT:/var/run/qga-freebsd-jcm.sock
    ;;
install)
    $QEMUBIN $BSDINST
    ;;
console)
    # Attach to the VM's serial console
    echo 'Exit the console with Ctrl-O:'
    socat -,raw,echo=0,escape=0x0f UNIX-CONNECT:/tmp/qemu-jcm-tty
    ;;
debug)
    # Open the QEMU monitor interactively.
    # To stop QEMU immediately (no clean guest shutdown):
    # echo "quit" | socat - UNIX-CONNECT:/tmp/qemu-monitor-freebsd-jcm
    socat - UNIX-CONNECT:/tmp/qemu-monitor-freebsd-jcm
    ;;
*)
    echo "Usage: $0 {start|stop|install|console|debug}" >&2
    exit 1
    ;;
esac

exit 0
```
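The script cannot tell whether the VM is currently up. A possible status branch, sketched below, queries the human monitor (HMP) socket the script already creates with -monitor; vm_status is a hypothetical helper name, and like the other branches it relies on socat.

```shell
#!/bin/sh
# vm_status [MONITOR_SOCKET]
# Report whether the FreeBSD VM is running by asking the QEMU human monitor
# on the UNIX socket created by the script's -monitor option.
vm_status() {
    mon=${1:-/tmp/qemu-monitor-freebsd-jcm}
    if [ ! -S "$mon" ]; then
        echo "stopped"
        return 1
    fi
    # HMP "info status" answers with a line such as "VM status: running";
    # socat fails if nothing is listening behind a stale socket file.
    if ! echo "info status" | socat - "UNIX-CONNECT:$mon"; then
        echo "no answer on $mon"
        return 1
    fi
}
```

It could be wired into the case statement as status) vm_status ;; next to the existing branches.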